Master these mental models to foolproof your AI solutions

Companies often underestimate AI’s complexity, leading to failed implementations and missed opportunities. Adopting these mental models can help address common failures.

Everyone seems to be in the weeds of implementing AI in their organizations. In fact, reports show that AI experiments and pilots are soaring, while production deployments of AI solutions are not.

In other words, everyone is experimenting, but not releasing AI solutions into the wild.

Yet others have rushed into deploying their solutions prematurely without considering the consequences, like when a chatbot accidentally offered a brand-new Chevy for $1. Others invested in standalone AI-enabled hardware devices, like the Rabbit R1 or the Humane pin, which tried to replace existing devices rather than enhance them, and failed.

These are just some examples of what can go wrong when AI is deployed too quickly without proper planning.

Trying to ride the hype, some teams rush their projects without considering the bigger picture, which leads to problems that could have been avoided.

I propose a more strategic, long-term approach, grounded in mental models.

Mental models are frameworks we rely on, often unconsciously, to make decisions.

Learning mental models helps us break down complex challenges, see patterns, and make better decisions by considering various perspectives.

In this article, I want to share three frameworks that can help shape your decision-making about AI.

Enjoy!

The Pitfalls of Short-Term AI Thinking

One of the root causes of failed AI initiatives is short-term thinking. Many companies view AI as a quick "tool" that will solve complex problems overnight. ChatGPT, Claude, and Gemini are capable of so much "out of the box," and cost so little, that they inadvertently spark ideas for solving problems in new ways. (Or... maybe they even spark ideas for "creating" problems to solve with AI?)

I am convinced that some use cases are born out of conversations with these assistants.

In fact, I've seen this firsthand with use cases that:

are all over the place (i.e., not strategic)

apply AI where traditional software can do the job (i.e., unrealistic expectations)

expect AI to deliver on low-impact use cases that are highly complex to implement (i.e., not worth implementing)

or are downright unrealistic projects that aren’t worth pursuing (this speaks for itself)

I've been observing AI adoption trends in the market for some time, and this is what I'm seeing.

There's often a significant overestimation of AI's immediate capabilities and underestimation of the complexity of implementing AI solutions.

AI is indeed powerful, but it's not always plug-and-play. Off-the-shelf solutions exist, but they may be limited in their capabilities and/or require customization.

That said …

AI requires careful planning.

If you are building custom AI solutions, plan for the training, fine-tuning, and integration work needed to deliver meaningful results. Companies seem to expect instant miracles (... there are plenty of those in the post-ChatGPT era) and are setting themselves up for disappointment.

The true cost of AI implementation is also frequently underestimated. The price tag goes well beyond the initial investment in technology. It includes data preparation, infrastructure upgrades, staff training, and ongoing maintenance – expenses that are often overlooked in the rush to adopt.

Scaling AI solutions beyond the proof of concept (POC) stage presents unexpected challenges. What works in a controlled environment may face significant hurdles when deployed across an entire organization.

Lastly, the pressure to show quick returns on investment (ROI) can lead to myopic decision-making.

Mental Models for Strategic AI Implementation

Long-term, strategic thinking is a must in AI.

To guide this paradigm shift, we can employ several mental models that reframe our approach to AI adoption.

Let's dive in ...

Second-Order Effects: Looking Beyond the Immediate Outcomes

The Second-Order Effects mental model pushes us to think about how AI’s initial success might generate deeper, less obvious changes over time. The first-order effect is usually easy to predict: an AI recommendation system, for example, might increase product sales by personalizing the customer experience. But the second-order effects, such as how the system might reshape customer behavior or even alter the competitive landscape, are often harder to foresee.

A striking example of Second-Order Effects comes from a company we all know very well: Amazon.

See ...

When Amazon launched its Prime service in 2005, the goal was simple: offer customers fast, free shipping for an annual subscription fee. It was a no-brainer for frequent shoppers. The first-order effect was clear—Prime memberships soared, and Amazon’s revenues skyrocketed as consumers flocked to take advantage of two-day shipping.

But the second-order effects were much more profound. The convenience of fast shipping led to a shift in consumer expectations, not just on Amazon but across the entire retail industry. Shoppers began to expect faster delivery times everywhere, putting immense pressure on other retailers to match Amazon’s logistics infrastructure.

The consequences rippled out further: the demand for quick delivery drove up competition in warehousing and logistics, increased packaging waste, and placed strain on warehouse workers, leading to criticism over working conditions. Additionally, brick-and-mortar stores struggled to compete with the convenience, contributing to the closure of many small businesses and the decline of shopping malls.

What started as a simple membership program to boost sales ended up fundamentally reshaping how people shop and the retail landscape itself.

When implementing AI-driven personalization or customer service solutions, for example, companies must consider how these solutions might reshape customer expectations.

Could instant AI-driven responses in one area lead customers to demand similar speed in other areas?

Will it pressure competitors to adopt similar strategies, changing the industry standard?

Remember: second-order effects are not always negative, but they require planning and adaptability to manage the long-term impact.

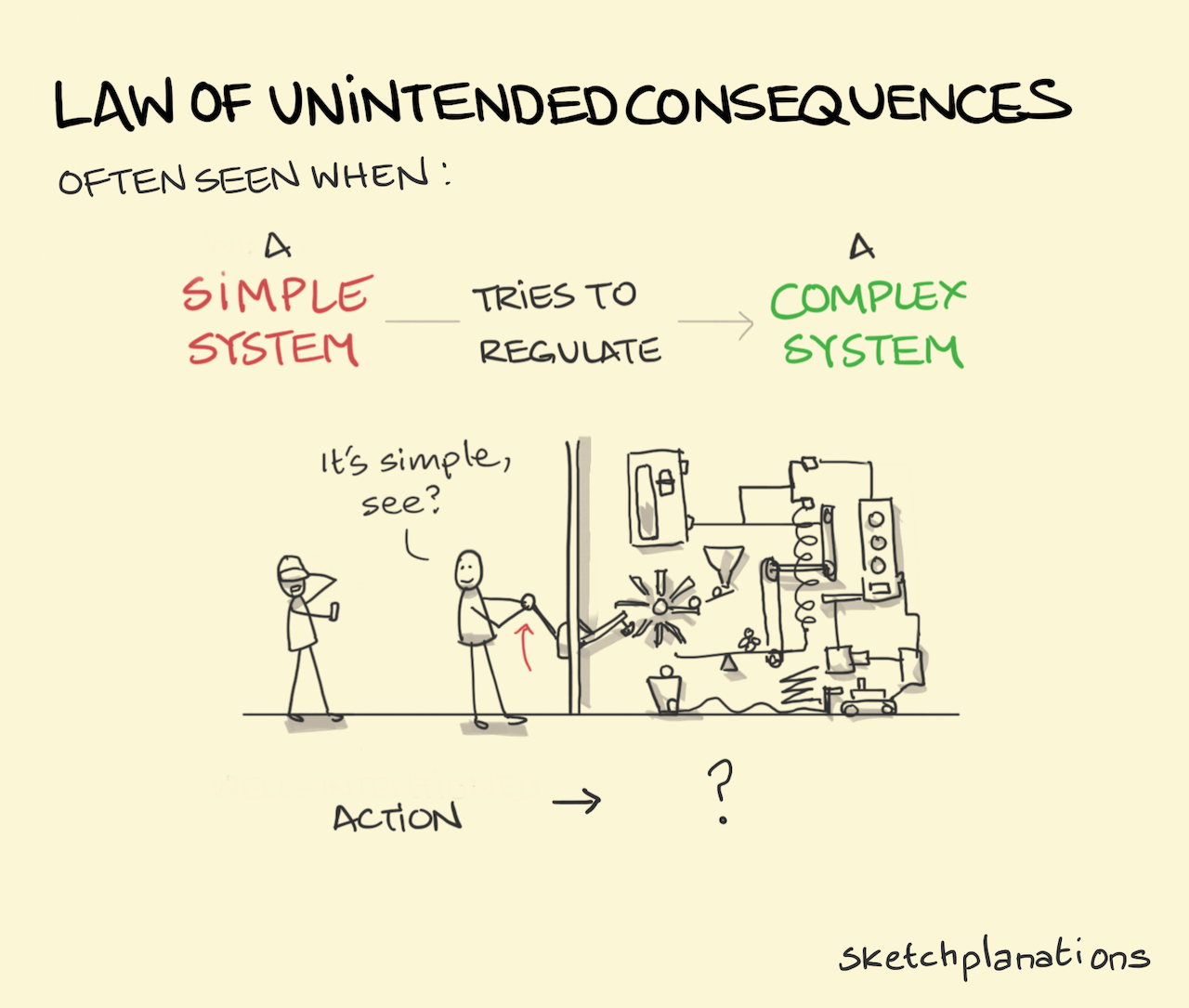

While Second-Order Effects help us anticipate how initial AI successes can ripple into larger, long-term changes, the Law of Unintended Consequences takes this idea further by focusing on the unexpected—and often overlooked—impacts that can arise when deploying AI. Unlike second-order effects, which are more predictable, unintended consequences can be completely surprising, affecting areas far beyond the initial scope of the AI solution.

The Law of Unintended Consequences: Understanding AI’s Broader Impact

In the rush to implement AI solutions, many businesses fail to think through the full range of potential outcomes. **The Law of Unintended Consequences** reminds us that actions often produce effects beyond what we initially anticipate. When applied to AI, this model encourages leaders to think about the cascading impacts an AI system might have, not just on the problem it was designed to solve, but on other areas of the business as well.

But this law is not unique to AI; you can see it in action throughout business history.

For example ...

In the early 1970s, Ford was racing to compete with imported Japanese cars, which were cheaper, lighter, and more fuel-efficient. Ford’s solution was the Pinto, a small, affordable car that could roll off the assembly line quickly. Executives were laser-focused on keeping costs low and bringing the car to market fast. But there was a problem: the gas tank.

In their rush to production, Ford engineers discovered a flaw—the gas tank was positioned in such a way that a rear-end collision could cause it to explode. Ford’s internal cost-benefit analysis concluded that fixing the issue wasn’t worth the expense. They went ahead with the launch, believing the risk was low.

But it wasn’t. As reports of deadly explosions mounted, Ford found itself facing a firestorm—both literally and figuratively. Lawsuits, bad press, and public outrage followed, ultimately costing the company far more than the initial savings. The Pinto, meant to be a quick win, became one of the most notorious product failures in history.

Applying The Law of Unintended Consequences can help organizations avoid these pitfalls by considering not just the immediate benefits but also the potential long-term effects of AI implementation.

For example, deploying an AI-powered chatbot to handle customer inquiries might seem like an obvious way to reduce operational costs and improve response times. However, without considering the broader implications, this decision can lead to unintended consequences:

How complex can questions get before the chatbot's answers lead to a decline in customer satisfaction?

What's the impact on your human agents? Will they still handle enough customer inquiries to stay motivated in their jobs?

The Law of Unintended Consequences shows how even the most well-planned AI initiatives can lead to unforeseen results, especially when considering broader organizational or societal impacts. But what happens when an AI solution doesn’t just produce unexpected results but actually worsens the very problem it was meant to solve?

This brings us to the Law of Perverse Consequences, which takes this concept further, highlighting how a seemingly well-designed solution can backfire, creating more harm than good. Unlike unintended consequences, where the effects may be neutral or mixed, perverse consequences tend to actively undermine the original goals, making the situation worse.

Law of Perverse Consequences: Avoiding the Backfire Effect

The Law of Perverse Consequences teaches us that sometimes, solutions to problems can make the situation worse. This is especially important in the context of AI, where even well-designed systems can have unintended negative effects if they aren’t implemented with care. The “perverse” part comes into play when a solution not only fails to fix a problem but exacerbates it, leading to worse outcomes than if the solution hadn’t been implemented at all.

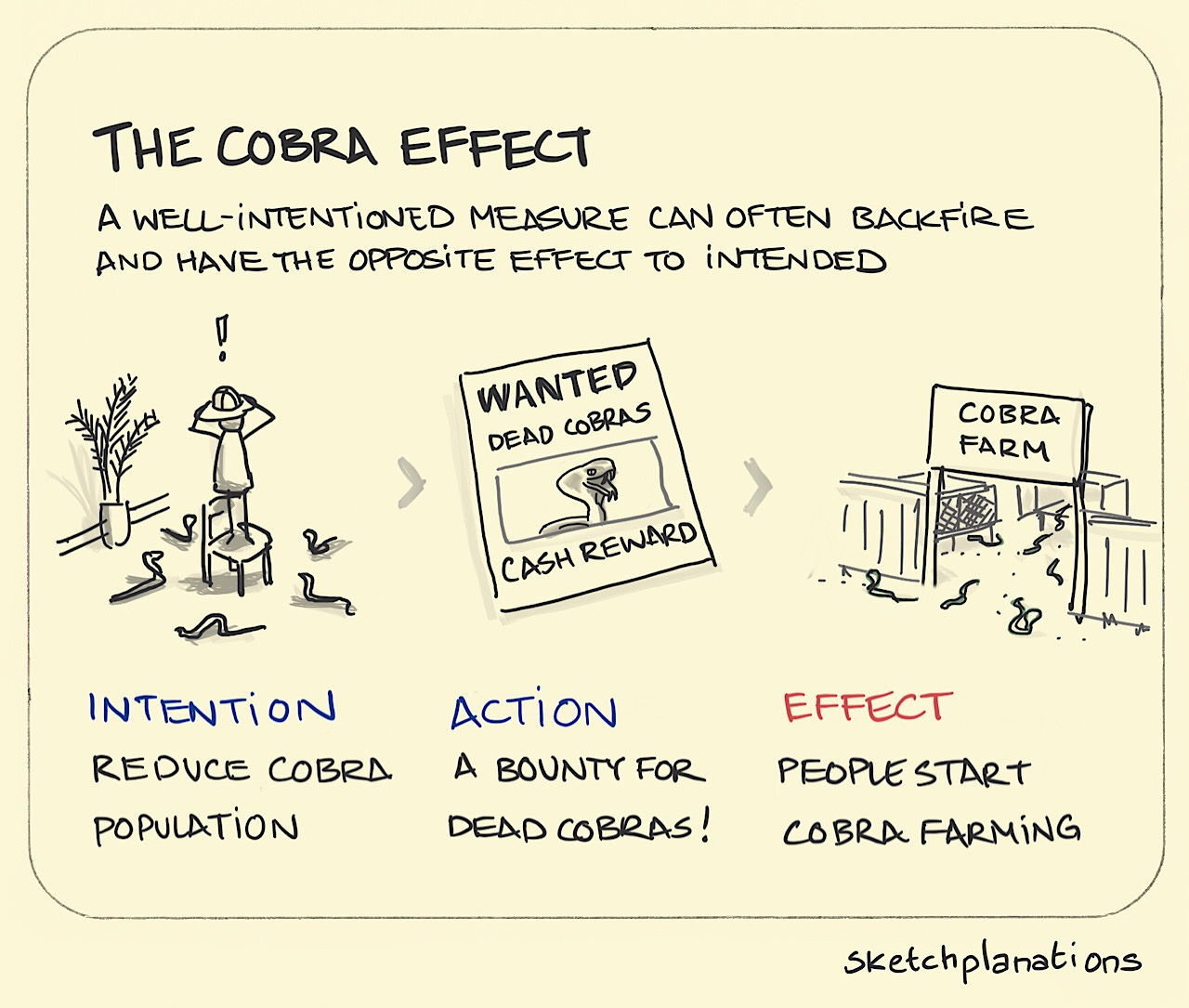

To see this law in action, consider the following situation that is now commonly referred to as "The Cobra Effect."

In British colonial India, officials faced a deadly problem—too many cobras. To solve this, the government offered a bounty for every dead cobra. Initially, it seemed to work. People brought in cobra skins, and the population appeared to dwindle. But soon, clever locals realized they could breed cobras, kill them, and collect the bounty. Instead of reducing the number of snakes, the bounty system inadvertently encouraged breeding.

When the government realized what was happening, they ended the program. But now, with no incentive to kill the cobras, people simply released the ones they had bred.

The result?

More cobras than ever before.

The Law of Perverse Consequences teaches us that well-intended solutions can backfire if the system they operate within is not fully understood.

In AI, implementing automation to eliminate human bias, for instance, can inadvertently embed historical biases into decision-making processes if the data used to train the AI reflects those biases.

Shifting to a Long-Term AI Strategy

To avoid falling into this trap, companies need to take a more comprehensive approach to AI deployment. Instead of viewing AI as a quick fix, organizations should consider a strategic, longer-term approach that entails:

1. Data Security and Privacy: As AI systems grow and handle more sensitive information, data security becomes paramount. Long-term planning must include evolving security measures to protect against future breaches that could lead to financial and reputational damage.

2. Data Management: High-quality data is the fuel for AI, especially for Retrieval-Augmented Generation (RAG) solutions, one of the most commonly adopted AI approaches. Consider how you'd handle document version control, data accuracy, and relevance.

3. Model Maintenance and Adaptability: AI models are not static solutions; they require continuous monitoring, retraining, and updating. Plan for regular reviews and adjustments to keep your AI relevant and effective as your business and data evolve.

4. Risk Management: AI brings risks of bias, toxicity, and regulatory non-compliance. Implement regular bias audits, ethical oversight, and compliance checks to ensure your AI operates safely and responsibly over the long term.

5. Change Management: AI adoption transforms workflows and roles within an organization. Plan for training, upskilling, and integrating AI solutions into day-to-day operations in a way that feels natural and assistive to users.

6. Anticipating Feedback Loops: As users interact with AI over time, unexpected behavioral patterns may emerge. Design AI systems that are adaptive and resilient to these feedback loops, preventing negative user behavior while continuously improving the system's value.

7. Workflow Integration: For long-term success, AI must seamlessly integrate into existing workflows. Focus on creating AI solutions that enhance rather than disrupt operations, making users' work easier without adding unnecessary complexity.

8. Cost Management: Monitor the long-term ROI of your AI initiatives. Ensure that your AI investments continue to drive impact without overrunning budgets.

9. Scalability: Plan for how your AI solutions will scale across the organization. Consider the infrastructure and resources needed to expand successful pilots enterprise-wide.

10. Talent Development: Building in-house AI expertise is crucial for long-term success. Invest in developing your team's AI skills and foster a culture of continuous learning to keep pace with rapid advancements in AI technology.
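To make the data management point (item 2) a bit more concrete, here is a minimal Python sketch of document version control for a RAG knowledge base. It assumes a simple in-memory store; `VersionedDocumentStore`, `upsert`, `current_corpus`, and `stale_documents` are hypothetical names for illustration, not part of any RAG framework. Each document keeps its full history, identical re-uploads are skipped using a SHA-256 checksum, and only the newest version is handed to the retriever, so superseded content (say, an old refund policy) is not served to users.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DocumentVersion:
    doc_id: str
    version: int
    content: str
    checksum: str
    updated_at: datetime


@dataclass
class VersionedDocumentStore:
    """Hypothetical in-memory store: keeps every version, serves only the latest."""

    history: dict[str, list[DocumentVersion]] = field(default_factory=dict)

    def upsert(self, doc_id: str, content: str) -> DocumentVersion:
        """Record a new version of a document unless its content is unchanged."""
        checksum = hashlib.sha256(content.encode("utf-8")).hexdigest()
        versions = self.history.setdefault(doc_id, [])
        # Identical content: return the existing version instead of re-indexing it.
        if versions and versions[-1].checksum == checksum:
            return versions[-1]
        new_version = DocumentVersion(
            doc_id=doc_id,
            version=len(versions) + 1,
            content=content,
            checksum=checksum,
            updated_at=datetime.now(timezone.utc),
        )
        versions.append(new_version)
        return new_version

    def current_corpus(self) -> dict[str, str]:
        """Return only the newest version of each document for retrieval."""
        return {doc_id: versions[-1].content for doc_id, versions in self.history.items()}

    def stale_documents(self, max_age_days: int, now: Optional[datetime] = None) -> list[str]:
        """List documents whose latest version is older than max_age_days (review candidates)."""
        now = now or datetime.now(timezone.utc)
        return [
            doc_id
            for doc_id, versions in self.history.items()
            if (now - versions[-1].updated_at).days > max_age_days
        ]


store = VersionedDocumentStore()
store.upsert("refund-policy", "Refunds within 30 days.")
store.upsert("refund-policy", "Refunds within 14 days.")
print(store.current_corpus())  # {'refund-policy': 'Refunds within 14 days.'}
```

In a real deployment, this idea would more likely live in your document pipeline or vector database (for example, as version metadata attached to each chunk). The in-memory dictionary here only illustrates the design choice: keep the history for auditing, flag stale documents for review, and serve just the latest version.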

By addressing these areas in your long-term AI strategy, you can create a foundation for sustainable AI adoption that drives lasting value for your organization.

How to Play the Long Game in AI Adoption

By applying these mental models and addressing the key areas outlined in our long-term strategy, we can shift from viewing AI as a tool for short-term gains to understanding it as a catalyst for profound, long-term transformation. This new perspective encourages more holistic thinking, considering not just the immediate impacts of AI adoption, but also the downstream consequences.

Rather than focusing solely on immediate wins, organizations should evaluate AI initiatives based on their long-term effects across the entire business landscape.

The true power of AI lies not in quick fixes or surface-level optimizations, but in its potential to drive fundamental business transformation over time.

The mental models of Second-Order Effects, The Law of Unintended Consequences, and The Law of Perverse Consequences serve as essential tools in this journey.

They remind us that even the most well-intentioned AI initiatives can have ripple effects, unexpected outcomes, or outright backfires.

But by applying these models, we can anticipate challenges, design more resilient solutions, and create contingency plans before issues arise.